
Top 5 Big Data Platform predictions for 2017

The Rise of Data Science Notebooks

Apache Zeppelin is a web-based notebook that enables interactive data analytics. You can make beautiful data-driven, interactive and collaborative documents with SQL, Scala, Python and more. Although Apache Zeppelin is still an incubator project, I expect a serious boost for notebooks like Apache Zeppelin on top of data processing (like Apache Spark) and data storage (like HDFS, NoSQL and also RDBMS) solutions. Read more on my previous post.

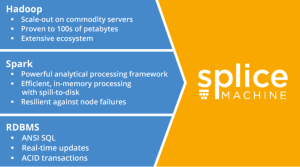

Splice Machine replaces traditional RDBMSs

Splice Machine delivers an open-source database solution that incorporates the proven scalability of Hadoop, the standard ANSI SQL and ACID transactions of an RDBMS, and the in-memory performance of Apache Spark.

Machine Learning as a Service (MLaaS)

Machine Learning is the subfield of computer science that “gives computers the ability to learn without being explicitly programmed”. Within the field of data analytics, Machine Learning is a method used to devise complex models and algorithms that lend themselves to prediction; this is known as predictive analytics. The rise of the Machine Learning as a Service (MLaaS) model is good news for the market, because it reduces the complexity and time required to implement Machine Learning and opens the door to wider adoption. One of the companies that provides MLaaS is Microsoft, with Azure ML.

Apache Spark on Kubernetes with Red Hat OpenShift

OpenShift is Red Hat's Platform-as-a-Service (PaaS) that allows developers to quickly develop, host, and scale applications in a cloud environment. OpenShift is well suited to building data-driven applications with microservices. Apache Spark can be made natively aware of Kubernetes with OpenShift by implementing a Spark scheduler backend that can run Spark application Drivers and bare Executors in Kubernetes pods. See more on the OpenShift Commons Big Data SIG #2 blog.

MapR-FS instead of HDFS

If you’re familiar with the HDFS architecture, you’ll know about the NameNode concept, which is a separate server process that tracks the locations of files within your cluster. MapR-FS doesn’t have such a concept, because that information is embedded in the data nodes themselves, so it’s distributed across the cluster. The second architectural difference is that MapR-FS is written in native code and talks directly to disk. HDFS (written in Java) runs in the JVM and then talks to a Linux file system before it talks to disk, so there are a few extra layers that affect performance and scalability. Read more differences in the MapR-FS vs. HDFS blog.

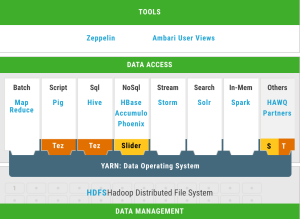

Apache Zeppelin “the notebook” on top of all the (Big) Data

Apache Zeppelin is a web-based notebook that enables interactive data analytics. You can make beautiful data-driven, interactive and collaborative documents with SQL, Scala, Python and more. Although Apache Zeppelin is still an incubator project, I expect a serious boost for notebooks like Apache Zeppelin on top of data processing (like Apache Spark) and data storage (like HDFS, NoSQL and also RDBMS) solutions.

But is Apache Zeppelin covered in the current Hadoop distributions Cloudera, Hortonworks and MapR?

Cloudera does not cover Apache Zeppelin out of the box, but there is a blog post on how to install Apache Zeppelin on CDH. Hortonworks covers Apache Zeppelin out of the box, see the picture of the HDP projects (well done Hortonworks). MapR does not cover Apache Zeppelin out of the box, but there is a blog post on how to build Apache Zeppelin on MapR.

Is Apache Zeppelin covered by the largest cloud providers Amazon AWS, Microsoft Azure and Google Cloud Platform?

Amazon Web Services (AWS) has a Platform as a Service (PaaS) solution called Elastic MapReduce (EMR). Since this summer, Apache Zeppelin has been listed as supported on the EMR release page.

For Microsoft Azure, there is a blog post on how to get started with Apache Zeppelin on the HDInsight Spark cluster (this is also a PaaS solution).

For Google Cloud Platform, there is a blog post on installing Apache Zeppelin on top of Google BigQuery.

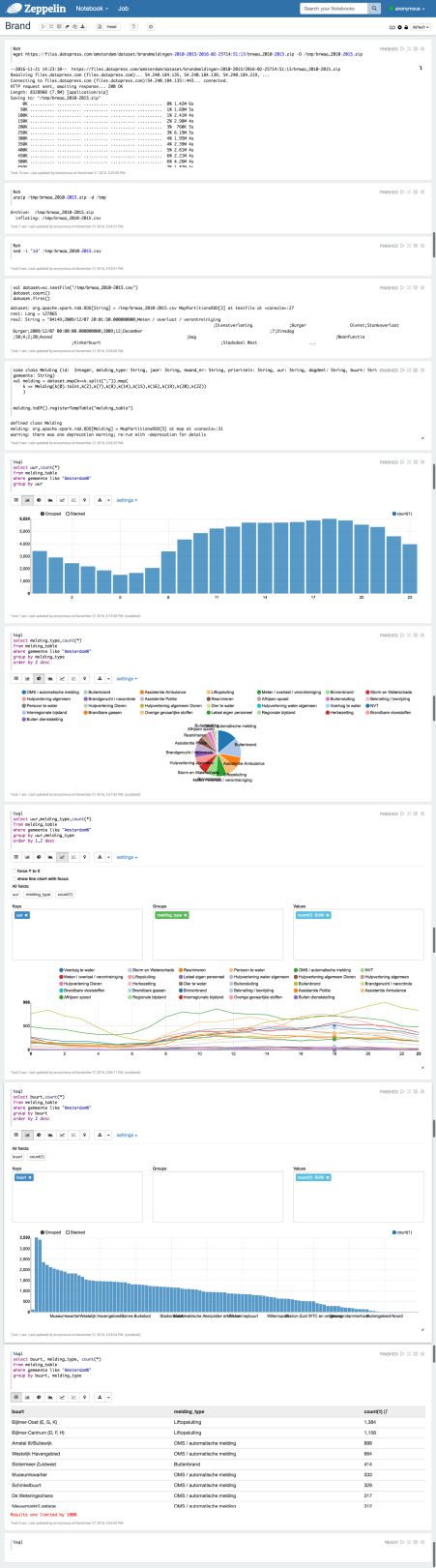

And now a short demo: let's do some data discovery with Apache Zeppelin on an open data set. For this case I use the Fire Report from the City of Amsterdam from 2010 to 2015.

If you want a short intro, first watch this overview video of Apache Zeppelin.

Of course I use Docker to start a Zeppelin container. I found an image on Docker Hub from Dylan Meissner (thx). Start the container by entering the command below:

$ docker run -d -p 8080:8080 dylanmei/zeppelin

Look in the browser at dockerhost:8080 and create a new notebook:

Step 1: Load and unzip the dataset (I use the “shell” interpreter)

%sh wget https://files.datapress.com/amsterdam/dataset/brandmeldingen-2010-2015/2016-02-25T14:51:13/brwaa_2010-2015.zip -O /tmp/brwaa_2010-2015.zip

%sh unzip /tmp/brwaa_2010-2015.zip -d /tmp

Step 2: Clean the data, in this case remove the header

%sh sed -i '1d' /tmp/brwaa_2010-2015.csv
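One thing to watch with Step 2: the sed command deletes the first line every time it runs, so re-running the paragraph removes a data row. Below is a minimal sketch of a guarded version for a %sh paragraph. The function name strip_header is my own, and it assumes that data rows start with a digit (the numeric id that Step 4 parses with toInt), so anything else on line 1 is treated as the header. The sample file is made up, not the real data set.

```shell
# Remove the first line of a CSV only if it looks like a header.
# Assumption: data rows start with a digit (the numeric id column),
# so a first line starting with anything else is treated as the header.
strip_header() {
  file="$1"
  first_line=$(head -n 1 "$file")
  case "$first_line" in
    [0-9]*) echo "no header found, file left unchanged" ;;
    *)      sed -i '1d' "$file"; echo "header removed" ;;
  esac
}

# Demo on a made-up sample file (not the real data set)
sample=$(mktemp)
printf 'id;type;jaar\n1;brand;2010\n2;brand;2011\n' > "$sample"
strip_header "$sample"   # first call removes the header line
strip_header "$sample"   # second call leaves the data rows alone
cat "$sample"
```

On the real file the call would be strip_header /tmp/brwaa_2010-2015.csv.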

Step 3: Put data into HDFS

%sh hadoop fs -put /tmp/brwaa_2010-2015.csv /tmp

Step 4: Load the data (most important fields) via a case class and use the map function (default Scala)

val dataset=sc.textFile("/tmp/brwaa_2010-2015.csv")

case class Melding (id: Integer, melding_type: String, jaar: String, maand_nr: String, prioriteit: String, uur: String, dagdeel: String, buurt: String, wijk: String, gemeente: String)

val melding = dataset.map(k => k.split(";")).map(
  k => Melding(k(0).toInt, k(2), k(7), k(8), k(14), k(15), k(16), k(19), k(20), k(22))
)

melding.toDF().registerTempTable("melding_table")
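The mapping in Step 4 reads up to k(22), so every row needs at least 23 fields, otherwise the Spark job fails with an index error. A rough pre-check in a %sh paragraph could look like the sketch below. The function name count_short_rows is hypothetical and the sample rows are invented. Trailing semicolons are stripped before counting, because Scala's split(";") drops trailing empty fields.

```shell
# Count rows that have fewer than a minimum number of ';'-separated fields.
# Trailing ';' are stripped first, to mimic split(";") dropping
# trailing empty fields.
count_short_rows() {
  awk -F';' -v min="$2" '{ sub(/;+$/, "") } NF < min { n++ } END { print n + 0 }' "$1"
}

# Demo on a made-up sample where rows are expected to have 3 fields
sample=$(mktemp)
printf '1;a;b\n2;c\n3;d;e\n' > "$sample"
count_short_rows "$sample" 3   # prints 1 (the row "2;c" is too short)
```

For the fire report the call would be count_short_rows /tmp/brwaa_2010-2015.csv 23. If it prints anything other than 0, those rows need to be filtered out or fixed before the map step.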

Step 5: Use Spark SQL to run the first query

%sql select count(*) from melding_table

Below you can see some more queries and charts:

The next step is to predict fires with the help of Spark ML.

Install single node Hadoop on CentOS 7 in 5 simple steps

First install CentOS 7 (minimal) (CentOS-7.0-1406-x86_64-DVD.iso)

I downloaded the CentOS 7 ISO here

### Vagrant Box

You can use my Vagrant box for a default CentOS 7, if you are using VirtualBox

$ vagrant init malderhout/centos7

$ vagrant up

$ vagrant ssh

### Make sure the hostname “centos7” is added to /etc/hosts

127.0.0.1 centos7 localhost localhost.localdomain localhost4 localhost4.localdomain4

### Add port forwarding to the Vagrantfile located on the host machine, for example:

config.vm.network "forwarded_port", guest: 50070, host: 50070

### If you are not root, switch to root

$ sudo su

### Install wget, we use this later to obtain the Hadoop tarball

$ yum install wget

### Disable the firewall (not needed if you use the vagrant box)

$ systemctl stop firewalld

We install Hadoop in 5 simple steps:

1) Install Java

2) Install Hadoop

3) Configure Hadoop

4) Start Hadoop

5) Test Hadoop

1) Install Java

### install OpenJDK Runtime Environment (Java SE 7)

$ yum install java-1.7.0-openjdk

2) Install Hadoop

### create hadoop user

$ useradd hadoop

### login to hadoop

$ su - hadoop

### generating SSH Key

$ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa

### authorize the key

$ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

### set chmod

$ chmod 0600 ~/.ssh/authorized_keys

### verify key works / check no password is needed

$ ssh localhost

$ exit

### download and install hadoop tarball from apache in the hadoop $HOME directory

$ wget http://apache.claz.org/hadoop/common/hadoop-2.5.0/hadoop-2.5.0.tar.gz

$ tar xzf hadoop-2.5.0.tar.gz

3) Configure Hadoop

### Setup Environment Variables. Add the following lines to the .bashrc

export JAVA_HOME=/usr/lib/jvm/jre

export HADOOP_HOME=/home/hadoop/hadoop-2.5.0

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

### initiate variables

$ source $HOME/.bashrc
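After sourcing the .bashrc it is worth checking that the variables really are set in the current shell. A small sketch, with a helper name (missing_vars) of my own choosing:

```shell
# Print the name of every given environment variable that is unset or empty.
missing_vars() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    [ -n "$value" ] || echo "$name"
  done
}

# Prints nothing when all three are set
missing_vars JAVA_HOME HADOOP_HOME HADOOP_COMMON_LIB_NATIVE_DIR
```

Any name that is printed is missing from the environment, which usually means a typo in the .bashrc or a shell that was opened before the file was edited.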

### Put the property info below between the “configuration” tags of each file

### Edit $HADOOP_HOME/etc/hadoop/core-site.xml

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>
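To make clear what "between the configuration tags" means, here is a sketch that writes a complete core-site.xml with only the property above. The function name write_core_site is hypothetical, and the demo writes into a temporary directory so nothing in $HADOOP_HOME is touched. Note that it overwrites any existing core-site.xml in the target directory.

```shell
# Write a complete core-site.xml containing the property from this step.
# The target directory is an argument so the sketch can be tried safely.
write_core_site() {
  cat > "$1/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}

# Demo: write into a temporary directory and show the result
demo_dir=$(mktemp -d)
write_core_site "$demo_dir"
cat "$demo_dir/core-site.xml"
```

For the real installation the call would be write_core_site $HADOOP_HOME/etc/hadoop. The other files follow the same pattern, each with its own properties inside one configuration element.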

### Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>

</property>

### copy template

$ cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

### Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml

<property> <name>mapreduce.framework.name</name> <value>yarn</value> </property>

### Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

### set JAVA_HOME

### Edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and add the following line

export JAVA_HOME=/usr/lib/jvm/jre

4) Start Hadoop

# format namenode to keep the metadata related to datanodes

$ hdfs namenode -format

# run start-dfs.sh script

$ start-dfs.sh

# check that HDFS is running

# check there are 3 java processes:

# namenode

# secondarynamenode

# datanode

$ start-yarn.sh

# check there are 2 more java processes:

# resourcemanager

# nodemanager
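The process check can be scripted. The sketch below reads jps-style output (a pid and a class name per line) and prints which of the five daemons are missing. The function name missing_daemons is my own, and the demo feeds it invented sample output instead of calling jps. As far as I know jps ships with the JDK (the -devel package on CentOS), so it may not be available if only the runtime from step 1 is installed.

```shell
# Print the expected Hadoop daemons that are absent from jps-style
# output ("<pid> <ClassName>" per line) read from stdin.
missing_daemons() {
  running=$(awk '{ print $2 }')
  for daemon in NameNode SecondaryNameNode DataNode ResourceManager NodeManager; do
    # -x matches whole lines, so NameNode does not match SecondaryNameNode
    echo "$running" | grep -qx "$daemon" || echo "$daemon"
  done
}

# Demo with invented sample output in which the NodeManager is not running
printf '2101 NameNode\n2230 DataNode\n2410 SecondaryNameNode\n2590 ResourceManager\n2999 Jps\n' \
  | missing_daemons   # prints NodeManager
```

On the Hadoop machine the real check would be jps | missing_daemons, run as the hadoop user. No output means all five daemons are up.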

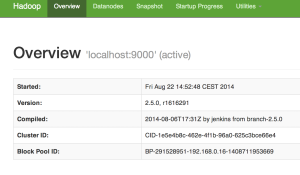

5) Test Hadoop

### access hadoop via the browser on port 50070

### put a file

$ hdfs dfs -mkdir /user

$ hdfs dfs -mkdir /user/hadoop

$ hdfs dfs -put /var/log/boot.log

### check in your browser if the file is available

Works!!! See also https://github.com/malderhout/hadoop-centos7-ansible