Install single-node Hadoop on CentOS 7 in 5 simple steps

First, install CentOS 7 (minimal). I used CentOS-7.0-1406-x86_64-DVD.iso.

I downloaded the CentOS 7 ISO here.

### Vagrant Box

If you use VirtualBox, you can use my Vagrant box for a default CentOS 7:

$ vagrant init malderhout/centos7

$ vagrant up

$ vagrant ssh

### Make sure the hostname “centos7” is in /etc/hosts

127.0.0.1 centos7 localhost localhost.localdomain localhost4 localhost4.localdomain4
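If you automate the VM setup, you can first check whether the alias is already there instead of editing /etc/hosts blindly. A minimal sketch; `hosts_has_alias` is a hypothetical helper that only looks at non-comment lines whose first field is `127.0.0.1`.

```shell
# Hypothetical helper: succeed if ALIAS is listed on a 127.0.0.1 line of FILE.
# Usage: hosts_has_alias ALIAS [FILE]   (FILE defaults to /etc/hosts)
hosts_has_alias() {
    local want=$1 hosts_file=${2:-/etc/hosts}
    awk -v want="$want" '
        $1 ~ /^#/ { next }                       # skip comment lines
        $1 == "127.0.0.1" {
            for (i = 2; i <= NF; i++)            # fields 2..NF are host names
                if ($i == want) found = 1
        }
        END { exit (found ? 0 : 1) }
    ' "$hosts_file"
}
```

For example: `hosts_has_alias centos7 || echo "centos7 is missing from /etc/hosts"`.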

### Add port forwarding to the Vagrantfile on the host machine, for example:

config.vm.network "forwarded_port", guest: 50070, host: 50070
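To forward more than one port (for example 9000 for remote HDFS clients, as discussed in the comments below, or 8088, the default web port of the YARN ResourceManager), you can generate the Vagrantfile lines instead of typing them. A sketch; `vagrant_forward_lines` is a hypothetical helper that prints one `config.vm.network` line per port, mapping each guest port to the same host port.

```shell
# Hypothetical helper: print one Vagrantfile forwarded_port line per port,
# using the same port number on the guest and on the host.
vagrant_forward_lines() {
    for port in "$@"; do
        case $port in
            ''|*[!0-9]*) echo "not a port number: $port" >&2; return 1 ;;
        esac
        printf 'config.vm.network "forwarded_port", guest: %s, host: %s\n' "$port" "$port"
    done
}
```

Run `vagrant_forward_lines 50070 8088 9000`, paste the output inside the `Vagrant.configure` block of the Vagrantfile, and run `vagrant reload` so the VM picks up the new ports.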

### If you are not root, switch to root

$ sudo su

### Install wget; we use it later to download the Hadoop tarball

$ yum install wget

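### Disable the firewall (not needed if you use the Vagrant box); systemctl stop turns it off now, systemctl disable keeps it off after a reboot

$ systemctl stop firewalld

$ systemctl disable firewalld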

We install Hadoop in 5 simple steps:

1) Install Java

2) Install Hadoop

3) Configure Hadoop

4) Start Hadoop

5) Test Hadoop

1) Install Java

### install OpenJDK Runtime Environment (Java SE 7)

$ yum install java-1.7.0-openjdk
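Both .bashrc and hadoop-env.sh below set JAVA_HOME=/usr/lib/jvm/jre. If that directory does not exist on your machine, you can find the right value by resolving the symlinks of the java command that yum just installed. A sketch; `java_home_from_bin` is a hypothetical helper and assumes GNU `readlink -f`, which CentOS provides.

```shell
# Hypothetical helper: print the JAVA_HOME that belongs to a java binary.
# It follows the symlink chain (e.g. /usr/bin/java -> /etc/alternatives/java
# -> the real binary) and strips the trailing /bin/java.
java_home_from_bin() {
    local bin=${1:-/usr/bin/java} real
    if [ ! -e "$bin" ]; then
        echo "no such file: $bin" >&2
        return 1
    fi
    real=$(readlink -f "$bin") || return 1
    case $real in
        */bin/java) printf '%s\n' "${real%/bin/java}" ;;
        *) echo "unexpected java path: $real" >&2; return 1 ;;
    esac
}
```

Running `java_home_from_bin` without arguments inspects /usr/bin/java; on CentOS the result is typically a directory under /usr/lib/jvm that you can use as JAVA_HOME.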

2) Install Hadoop

### create hadoop user

$ useradd hadoop

### log in as the hadoop user

$ su - hadoop

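### generate an SSH key (RSA: OpenSSH 7.0 and later disable DSA keys by default, so a DSA key may be rejected on an updated CentOS 7)

$ ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

### authorize the key

$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys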

### restrict the permissions of authorized_keys (read/write for the owner only)

$ chmod 0600 ~/.ssh/authorized_keys

### verify that the key works: no password should be asked

$ ssh localhost

$ exit
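Two things commonly break passwordless login: an authorized_keys file with the wrong permissions (sshd, with its default `StrictModes yes`, ignores one that is writable by group or others) and a key that still triggers a password prompt. A sketch of both checks; `key_file_is_private` and `ssh_is_passwordless` are hypothetical helper names. The first simply verifies the 0600 mode set above; the second uses ssh's `BatchMode=yes` option, which makes ssh fail instead of prompting.

```shell
# Hypothetical helper: succeed if FILE is a regular file with exactly mode 0600.
# Uses POSIX "find -perm", so it does not depend on GNU stat.
key_file_is_private() {
    [ -f "$1" ] && [ -n "$(find "$1" -perm 600)" ]
}

# Hypothetical helper: succeed if "ssh HOST true" runs without any prompt.
# BatchMode=yes makes ssh fail rather than ask for a password.
ssh_is_passwordless() {
    ssh -o BatchMode=yes "${1:-localhost}" true
}
```

Run `key_file_is_private ~/.ssh/authorized_keys && ssh_is_passwordless localhost` after the manual `ssh localhost` above: in batch mode ssh also refuses to accept a new host key, so the first interactive login is still needed.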

### download the Hadoop tarball from an Apache mirror and unpack it in the hadoop user's $HOME directory

$ wget http://apache.claz.org/hadoop/common/hadoop-2.5.0/hadoop-2.5.0.tar.gz

$ tar xzf hadoop-2.5.0.tar.gz
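Mirrors come and go, and old releases are eventually dropped from them, while Apache keeps past releases on its archive server. A small helper that builds the archive URL for a given version can replace the hard-coded link. A sketch assuming the archive.apache.org/dist/hadoop/common/ layout; `hadoop_tarball_url` is a hypothetical name.

```shell
# Hypothetical helper: print the Apache archive URL of a Hadoop release tarball.
hadoop_tarball_url() {
    if [ -z "$1" ]; then
        echo "usage: hadoop_tarball_url VERSION" >&2
        return 1
    fi
    printf 'https://archive.apache.org/dist/hadoop/common/hadoop-%s/hadoop-%s.tar.gz\n' \
        "$1" "$1"
}
```

For example, `wget "$(hadoop_tarball_url 2.5.0)"` followed by `tar xzf hadoop-2.5.0.tar.gz`. If you pick another version, adjust HADOOP_HOME in the next step to match.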

3) Configure Hadoop

### Set up environment variables: add the following lines to the hadoop user's ~/.bashrc

export JAVA_HOME=/usr/lib/jvm/jre

export HADOOP_HOME=/home/hadoop/hadoop-2.5.0

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

### load the variables into the current shell

$ source $HOME/.bashrc
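Pasting the block twice leaves duplicate lines in .bashrc, and a typo such as the extra = one commenter had in HADOOP_COMMON_HOME is easy to miss in a long file. A sketch of appending each line only if it is not already present; `append_once` is a hypothetical helper name.

```shell
# Hypothetical helper: append LINE to FILE unless FILE already contains
# exactly that line (fixed-string, whole-line match).
append_once() {
    local file=$1 line=$2
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}
```

For example, `append_once ~/.bashrc 'export HADOOP_HOME=/home/hadoop/hadoop-2.5.0'`. Quote each line in single quotes so that $PATH and $HADOOP_HOME are written literally and only expanded when .bashrc runs, then check the result with `echo $HADOOP_HOME` after sourcing.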

### For each file below, put the properties between its “configuration” tags

### Edit $HADOOP_HOME/etc/hadoop/core-site.xml

<property>
  <name>fs.default.name</name>
  <value>hdfs://localhost:9000</value>
</property>
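Each *-site.xml file is an XML document whose configuration element holds a list of property entries, each with a name and a value. If you prefer generating the files to editing them, a helper can write a complete file from name/value pairs. A sketch; `write_site_xml` is a hypothetical name, it overwrites the target file, and it does not escape XML special characters such as & or < in values.

```shell
# Hypothetical helper: write a Hadoop *-site.xml file from NAME VALUE pairs.
# Usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]
# FILE is overwritten. Names and values are written verbatim (no XML escaping).
write_site_xml() {
    if [ $# -lt 1 ] || [ $(( ($# - 1) % 2 )) -ne 0 ]; then
        echo "usage: write_site_xml FILE NAME VALUE [NAME VALUE ...]" >&2
        return 1
    fi
    local file=$1
    shift
    {
        printf '<?xml version="1.0"?>\n<configuration>\n'
        while [ $# -gt 0 ]; do
            printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
            shift 2
        done
        printf '</configuration>\n'
    } > "$file"
}
```

For example, `write_site_xml "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.default.name hdfs://localhost:9000`. In Hadoop 2 the property names used in this post still work but are deprecated aliases; the current names are fs.defaultFS, dfs.namenode.name.dir and dfs.datanode.data.dir.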

### Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml

<property>
  <name>dfs.replication</name>
  <value>1</value>
</property>
<property>
  <name>dfs.name.dir</name>
  <value>file:///home/hadoop/hadoopdata/hdfs/namenode</value>
</property>
<property>
  <name>dfs.data.dir</name>
  <value>file:///home/hadoop/hadoopdata/hdfs/datanode</value>
</property>
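The namenode and datanode directories referenced above live under the hadoop user's home directory. One commenter fixed their startup problem partly by creating them up front, which also makes a typo in the path obvious. A sketch; `make_hdfs_dirs` is a hypothetical helper that creates both directories under a base path and restricts them to their owner (mode 700).

```shell
# Hypothetical helper: create the NameNode and DataNode directories used in
# hdfs-site.xml under BASE (default: ~/hadoopdata/hdfs) with mode 700.
make_hdfs_dirs() {
    local base=${1:-$HOME/hadoopdata/hdfs}
    mkdir -p "$base/namenode" "$base/datanode" &&
        chmod 700 "$base/namenode" "$base/datanode"
}
```

Run `make_hdfs_dirs` as the hadoop user so the directories are owned by that user.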

### copy template

$ cp $HADOOP_HOME/etc/hadoop/mapred-site.xml.template $HADOOP_HOME/etc/hadoop/mapred-site.xml

### Edit $HADOOP_HOME/etc/hadoop/mapred-site.xml

<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>

### Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml

<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>

### Set JAVA_HOME: edit $HADOOP_HOME/etc/hadoop/hadoop-env.sh and add the following line

export JAVA_HOME=/usr/lib/jvm/jre
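The stock hadoop-env.sh in Hadoop 2.x typically already contains an `export JAVA_HOME=${JAVA_HOME}` line. Instead of adding a second export below it, you can replace the existing one so the file sets the value explicitly for the daemons. A sketch; `set_hadoop_env_java_home` is a hypothetical helper relying on GNU `sed -i`, which CentOS provides.

```shell
# Hypothetical helper: replace any active "export JAVA_HOME=..." line in FILE
# with "export JAVA_HOME=VALUE", or append that line if there is none.
# Uses GNU sed -i; VALUE must not contain "|" or "&" (special in the sed command).
set_hadoop_env_java_home() {
    local file=$1 value=$2
    if grep -q '^export JAVA_HOME=' "$file"; then
        # "|" is the s/// delimiter because VALUE contains slashes.
        sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=$value|" "$file"
    else
        printf 'export JAVA_HOME=%s\n' "$value" >> "$file"
    fi
}
```

For example: `set_hadoop_env_java_home "$HADOOP_HOME/etc/hadoop/hadoop-env.sh" /usr/lib/jvm/jre`.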

4) Start Hadoop

### format the NameNode: this initializes the HDFS metadata directory (dfs.name.dir). Run it only once, because reformatting erases the existing HDFS metadata

$ hdfs namenode -format
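Because formatting is destructive, a setup script should only run it when the NameNode directory has not been initialized yet. A formatted NameNode directory contains a current/VERSION file, so its presence is a reasonable signal. A sketch; `namenode_is_formatted` is a hypothetical helper whose default path is the dfs.name.dir value from hdfs-site.xml above.

```shell
# Hypothetical helper: succeed if the NameNode metadata directory DIR has
# already been formatted (a formatted directory contains current/VERSION).
namenode_is_formatted() {
    [ -f "${1:-$HOME/hadoopdata/hdfs/namenode}/current/VERSION" ]
}
```

For example: `namenode_is_formatted || hdfs namenode -format`.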

### run the start-dfs.sh script

$ start-dfs.sh

### check that HDFS is running: jps should list 3 Java processes, NameNode, SecondaryNameNode and DataNode

$ jps

### start YARN

$ start-yarn.sh

### run jps again: there should be 2 more Java processes, ResourceManager and NodeManager

$ jps

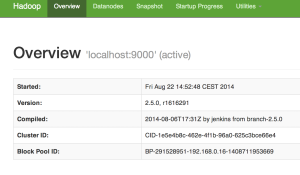
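jps is a JDK tool and is not part of the runtime package installed in step 1; on CentOS 7 it comes with java-1.7.0-openjdk-devel (otherwise the `ps aux | grep java` fallback from the comments works too). To check all five daemons at once, you can feed the jps output to a small filter. A sketch; `missing_daemons` is a hypothetical helper that reads jps output (a PID and a main class per line) on standard input and prints every expected daemon that is absent.

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# Hadoop daemon that does not appear in it. Succeeds only if none is missing.
missing_daemons() {
    awk '
        BEGIN {
            n = split("NameNode SecondaryNameNode DataNode ResourceManager NodeManager", want, " ")
        }
        { seen[$2] = 1 }   # jps prints "<pid> <main class>"
        END {
            missing = 0
            for (i = 1; i <= n; i++)
                if (!(want[i] in seen)) { print want[i]; missing++ }
            exit (missing > 0)
        }
    '
}
```

For example: `jps | missing_daemons && echo "all daemons are running"`.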

5) Test Hadoop

### open the NameNode web UI in your browser on port 50070 (with the Vagrant port forwarding above: http://localhost:50070)

### create your HDFS home directory and put a file in it

$ hdfs dfs -mkdir /user

$ hdfs dfs -mkdir /user/hadoop

$ hdfs dfs -put /var/log/boot.log

### check in your browser that the file is available under /user/hadoop
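Besides the browser check, a quick command-line test is to copy a file into HDFS, read it back and compare the bytes. If the NameNode has just started it may still be in safe mode (as in one of the comments below); `hdfs dfsadmin -safemode wait` blocks until it leaves. A sketch; `hdfs_roundtrip_ok` is a hypothetical helper that uses only `hdfs dfs -put`, `-cat` and `-rm`, and its default destination /user/hadoop/roundtrip-test.txt is a made-up file name.

```shell
# Hypothetical helper: put LOCAL into HDFS as DEST, read it back with -cat
# and compare the bytes with cmp. DEST is removed afterwards.
hdfs_roundtrip_ok() {
    local local_file=$1 dest=${2:-/user/hadoop/roundtrip-test.txt}
    hdfs dfs -put "$local_file" "$dest" || return 1
    hdfs dfs -cat "$dest" | cmp -s - "$local_file"
    local status=$?
    hdfs dfs -rm "$dest" >/dev/null
    return "$status"
}
```

For example: `hdfs_roundtrip_ok /etc/hosts && echo "HDFS read/write OK"`. The put fails if the destination already exists, so pick an unused name.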

Works!!! See also https://github.com/malderhout/hadoop-centos7-ansible

YARN_HOME must be replaced by HADOOP_YARN_HOME!

Thx done!

Thanks for the instructions! I got it installed and working. 🙂

Great tutorial!

The jps command is not working. Any workaround for that?

You can try “ps aux | grep java” to see the running Java processes.

Hello – do I need to set the classpath somewhere? When I try ‘hdfs namenode -format’ I get a class not found: org/apache/hadoop/security/authorize/RefreshAuthorizationPolicyProtocol. I’ve checked that this class is in the hadoop common jar…I am using hadoop-2.7.1.


Any help would be appreciated. Kate

Hi Kate. Did you install the OS and Java correctly???

I believe that I did. Using Centos 7, and openJDK 1.7.0_85.

And the Hadoop version 2.5.0 is a bit old. Which version did you download?

I downloaded 2.7.1 which is the latest stable.

I tested 2.7.1, although this blog uses 2.5.0. Hadoop 2.7.1 works! I tested it with the Vagrant box. Check HADOOP_HOME and enter the right version. Also set JAVA_HOME in $HADOOP_HOME/etc/hadoop/hadoop-env.sh. Hope that it will work for you.

Thanks, everyone, for your help: issue resolved. In .bashrc I had an extra = in the HADOOP_COMMON_HOME environment variable definition, and I created the directories referenced in the hdfs-site.xml file.

Awesome tutorial. Saved me a lot of time in getting started!

I am trying to install Hadoop on the CentOS minimal version (command line only), and I am unable to locate the .bashrc file.

Can you help me in editing the .bashrc?

Hi Aarti,

Check in the $HOME dir if .bashrc exists by:

ls -a .bashrc

To edit it, I use vi:

vi .bashrc

Hope that this will work for you.

Thanks, it is running smoothly inside hadoop VM, but if I use my local Mac to access this hadoop server, hdfs dfs -ls hdfs://hadoop-vm:9000/, I then got connection refused error. Do you know how to enable external request?

Thanks

Hi Frank,

Great to hear that it works.

Try to add:

config.vm.network "forwarded_port", guest: 9000, host: 9000

in the Vagrantfile

Greets,

Maikel

Sorry Malderhou, I am not using Vagrant, just plain centos7, do you know how to handle such situation in plain centos7 installation?

Hi Frank,

OK clear. Try:

iptables -A INPUT -p tcp --dport 9000 -j ACCEPT

Look for more info at https://community.rackspace.com/products/f/25/t/4504

Hope that this solves the issue

Greets,

Maikel

Thanks, I figured out that I have to use the IP address in the Hadoop configuration file core-site.xml. I was using hdfs://localhost:9000, which only worked inside the local VM; after I changed it to hdfs://xxx.xxx.xxx.xx:9000 it worked and accepted remote access. Thanks

I am trying to get a Single node on a local machine. I got everything working except “hdfs dfs -put /var/log/boot.log”. I am able to open up the browser, and see the hadoop folder. But the folder is empty too. For the command “hdfs dfs -put /var/log/boot.log” I see the warning “put: File /user/hadoop/boot.log._COPYING_ could only be replicated to 0 nodes instead of minReplication (=1). There are 0 datanode(s) running and no node(s) are excluded in this operation.”

Any help/guidance/tip would be awesome

I’ve followed your instructions and all went well until I reached this:

[hadoop@mysqldemo ~]$ hdfs dfs -mkdir /user

mkdir: Cannot create directory /user. Name node is in safe mode.

[hadoop@mysqldemo ~]$ hdfs dfsadmin -safemode leave

Safe mode is OFF

[hadoop@mysqldemo ~]$ hdfs dfs -mkdir /user

mkdir: Cannot create directory /user. Name node is in safe mode.

[hadoop@mysqldemo ~]$

What am I doing wrong?

Please let me know.

I found my issue my JDK was not installed correctly. Once I got that fixed, all is well.

I have installed Vagrant, CentOS and Oracle VirtualBox,

but I'm getting an SSH error.

Now I want to install Hadoop. Can anyone help me?

Thanks! I got it installed and working.

Nice article. Simple and comprehensive. I got it installed and successfully running hadoop single node cluster.

Hello Maikel,

Thanks for this wonderful tutorial. It worked fab. However, I wanted some more info about all the configuration XML files: what each one is used for and what their important properties are.

Do you know where would i get all these info in detail? Or else if you could explain in brief. Any help is highly appreciated.

Thanks,

David.

Hi David,

Thanks for the reply.

Look at https://hadoop.apache.org/docs/r2.5.2/

At the bottom of the page you see the configuration option.

You can also look at the book “Hadoop: The Definitive Guide”. This book has a chapter on how to manage all the different configuration files.

Hope you can do something with this info


awesome

Concise tutorial. Thanks
