Stream and analyse Tweets with the ELK / Docker stack in 3 simple steps

Today's market offers a lot of Big Data tools. If we want to stream and analyse some tweets, for example, there are several ways to do this:

- With the Hadoop ecosystem (Flume, Spark, Hive, etc.), presenting the results with, for example, Oracle Big Data Discovery.

- With the Microsoft Stack from Azure (Hadoop) and with Power BI to Excel.

- Or, as in this blog post, with the ELK stack (see Elastic) with the help of the Docker ecosystem.

- And of course a lot more solutions …

What is the ELK stack? ELK stands for Elasticsearch (Search & Analyze Data in Real Time), Logstash (Process Any Data, From Any Source) and Kibana (Explore & Visualize Your Data). More info at Elastic.

Most of you know Docker already. Docker allows you to package an application with all of its dependencies into a standardized unit for software development. And Docker will become more and more important for all kinds of open source Big Data solutions.

Let’s go for it!

Here are the 3 simple steps:

- Step 1: Install and test Elasticsearch

- Step 2: Install and test Logstash

- Step 3: Install and test Kibana

For now you only need a Docker host. I use Docker Toolbox.

If you don’t have a virtual machine, create one. For example:

$ docker-machine create --driver virtualbox --virtualbox-disk-size "40000" dev

Log on to the Docker host (in my case Docker Toolbox):

$ docker-machine ssh dev

Step 1: Install and test Elasticsearch

Install:

docker run --name elasticsearch -p 9200:9200 -d elasticsearch --network.host _non_loopback_

The --network.host _non_loopback_ option must be added from Elasticsearch 2.0 onwards, because from that version Elasticsearch binds only to localhost by default.

Test:

curl http://localhost:9200

You should see something like this (the sample below comes from a 1.7.0 node, so your version number may differ):

    {
      "status" : 200,
      "name" : "Dragonfly",
      "cluster_name" : "elasticsearch",
      "version" : {
        "number" : "1.7.0",
        "build_hash" : "929b9739cae115e73c346cb5f9a6f24ba735a743",
        "build_timestamp" : "2015-07-16T14:31:07Z",
        "build_snapshot" : false,
        "lucene_version" : "4.10.4"
      },
      "tagline" : "You Know, for Search"
    }
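If you want to check the running version from a script rather than by eye, a small helper like the one below can pull the version number out of that response. This is only a sketch: the function name es_version is my own, and it relies on plain sed pattern matching instead of a real JSON parser, so it assumes the response looks like the sample above.

```shell
# Print the Elasticsearch version number found in the JSON read from stdin.
# es_version is a made-up helper name; it matches the "number" field textually
# and does not parse JSON properly.
es_version() {
  sed -n 's/.*"number"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}

# Example against a live node (assumes the port mapping from the install step):
#   curl -s http://localhost:9200 | es_version
```

The helper only reads stdin, so it can be tried on a saved response as well as on the live curl output.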

Step 2: Install and test Logstash

Create the following “config.conf” file to stream tweets to Elasticsearch. Fill in your Twitter credentials and the Docker host IP. In this example we collect all tweets that contain the keyword “elasticsearch”. For now we do not use a filter.

    input {
      twitter {
        consumer_key => ""
        consumer_secret => ""
        oauth_token => ""
        oauth_token_secret => ""
        keywords => [ "elasticsearch" ]
        full_tweet => true
      }
    }

    filter {
    }

    output {
      stdout { codec => dots }
      elasticsearch {
        protocol => "http"
        host => "<Docker Host IP>"
        index => "twitter"
        document_type => "tweet"
      }
    }
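If you prefer not to type your credentials into the file by hand, the same config can be generated from environment variables. The sketch below is one possible way to do that; the function name write_logstash_config and the TWITTER_* and ES_HOST variable names are my own inventions, and the generated file simply mirrors the config above. If your Logstash image rejects the protocol and host settings, check the documentation of its elasticsearch output; as far as I know newer releases use hosts instead.

```shell
# Sketch: generate config.conf from environment variables.
# The TWITTER_* and ES_HOST names are made up for this example.
write_logstash_config() {
  target="${1:-config.conf}"
  # Refuse to write a config with empty credentials or an empty host.
  for name in TWITTER_CONSUMER_KEY TWITTER_CONSUMER_SECRET \
              TWITTER_OAUTH_TOKEN TWITTER_OAUTH_TOKEN_SECRET ES_HOST; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      return 1
    fi
  done
  # Unquoted heredoc delimiter, so the ${...} references are expanded.
  cat > "$target" <<EOF
input {
  twitter {
    consumer_key => "${TWITTER_CONSUMER_KEY}"
    consumer_secret => "${TWITTER_CONSUMER_SECRET}"
    oauth_token => "${TWITTER_OAUTH_TOKEN}"
    oauth_token_secret => "${TWITTER_OAUTH_TOKEN_SECRET}"
    keywords => [ "elasticsearch" ]
    full_tweet => true
  }
}
filter {
}
output {
  stdout { codec => dots }
  elasticsearch {
    protocol => "http"
    host => "${ES_HOST}"
    index => "twitter"
    document_type => "tweet"
  }
}
EOF
}
```

Set the five variables, then call write_logstash_config (it writes config.conf in the current directory unless you pass another path). With Docker Toolbox, running docker-machine ip dev on your workstation prints the address to use for ES_HOST.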

Install:

docker run --name logstash -it --rm -v "$PWD":/config logstash logstash -f /config/config.conf

Test: if everything went well, you see:

Logstash startup completed ...........
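The dots only tell you that events pass through Logstash. To confirm that they really arrive in the “twitter” index, you can ask Elasticsearch for a document count from a second session on the Docker host. The helper below is a sketch: tweet_count is a name I made up, and it extracts the number with sed on the assumption that the response contains a "count" field followed by an integer.

```shell
# Print the document count found in the JSON read from stdin.
# tweet_count is a made-up helper name; it matches the "count" field textually.
tweet_count() {
  sed -n 's/.*"count"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Example against the index used in config.conf:
#   curl -s http://localhost:9200/twitter/_count | tweet_count
```

Run it a few times; the number should grow as long as people tweet about the keyword.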

Step 3: Install and test Kibana

Install and link with the elasticsearch container:

docker run --name kibana --link elasticsearch:elasticsearch -p 5601:5601 -d kibana

Test:

curl http://localhost:5601

You should see something like an HTML page. Open http://<Docker Host IP>:5601 in a browser to see the Kibana 4 user interface.
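Kibana can take a little while to start, so the first curl may fail even though nothing is wrong. A small retry loop helps here. The sketch below is a generic helper (the name retry and its argument order are my own choice), not something that ships with Docker or Kibana.

```shell
# Retry a command until it succeeds or the attempts run out.
# usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Returns 0 as soon as the command succeeds, 1 if every attempt failed.
retry() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while ! "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 0
}

# Example: wait up to about a minute for Kibana to answer.
#   retry 30 2 curl -sf -o /dev/null http://localhost:5601
```

The same helper works for the Elasticsearch test in step 1 if you replace the URL with http://localhost:9200.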

First go to the Settings tab and enter the new index name “twitter”.

Then go to the Discover tab; after about 60 minutes of streaming you see something like this:

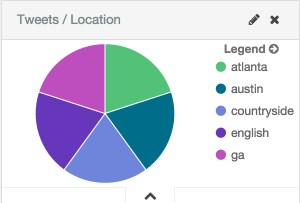

I also made a pie chart (Tweets per Location).
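A pie chart like that is a terms aggregation on a location field under the hood, and you can run a similar query directly against Elasticsearch. The sketch below is an assumption on my side, not the exact query Kibana sends: the field name user.location is what I would expect in a full tweet, the aggregation name tweets_per_location and the function name location_agg_query are made up, and the field is probably analyzed, so the buckets may be single words rather than full location strings.

```shell
# Print a search body that counts tweets per location (top 10 buckets).
# user.location is an assumed field name; adjust it to what your index contains.
location_agg_query() {
  cat <<'EOF'
{
  "size": 0,
  "aggs": {
    "tweets_per_location": {
      "terms": { "field": "user.location", "size": 10 }
    }
  }
}
EOF
}

# Example against the node from step 1:
#   location_agg_query | curl -s -XPOST 'http://localhost:9200/twitter/_search?pretty' \
#     -H 'Content-Type: application/json' -d @-
```

Setting "size" to 0 at the top level suppresses the individual hits, so the response contains only the buckets.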

And there are a lot more possibilities with the ELK stack. Enjoy!

More info: